Discussions about the promise of AI in K-12 education are everywhere. Personalized learning. Instant feedback. Better outcomes at scale. But when it comes to helping students succeed in math, the reality is more complicated — and the solutions are far less mature.

Earlier this year, NewSchools reviewed over 260 proposals for early-stage GenAI math tutoring solutions. This work unfolded against a backdrop of troubling NAEP math scores, growing educator interest in AI tutoring, and continued recognition of high-dosage tutoring as one of the most effective strategies for accelerating learning. At the same time, schools want to expand tutoring to reach more students in more settings, while leaders across sectors are making unprecedented investments in AI in education. Our goal wasn’t to follow the hype. We wanted to see what’s actually being built and identify developers putting quality, evidence, and inclusion at the center of their design.

Here are three takeaways that reflect both the trends we saw and the strategic choices we made.

1. There’s no single foundation model that works best for GenAI math tutoring, and the strongest tools rely on multiple models.

Some teams in the applicant pool rely on a single foundation model to power their tutoring solutions. But no single model does it all, and new ones are released every few months. The strongest developers are building for flexibility, combining multiple models to improve accuracy, cut costs, and deliver timely, relevant feedback that caters to diverse learners. Some layer in knowledge graphs to align with curriculum and learning science, ensuring AI tutoring content is consistent with core instruction and pedagogical best practices. We invested in ventures taking this integrated approach — swapping in better models as they’re released — because their solutions have staying power and adapt to classroom needs.

2. The strongest ideas use AI to enhance human connection, not replace it.

In the broader applicant pool, we saw many tools that position AI as a standalone tutor. But effective tutoring is powered by relationships. The most promising ideas use AI to help tutors prepare more effectively, give teachers real-time insights to better support students, and make student learning more interactive and social. Some make it easier for students to collaborate in small groups or get just-in-time support, even without a tutor present. Others provide tutors with in-session ideas and post-session feedback that can help them continuously improve how they show up for their students. We backed solutions that keep people in the equation and use AI to make tutoring more scalable, effective, and human-centered. The future of AI tutoring isn’t human vs. machine — it’s humans and machines working together.

3. Student motivation is just as important as accuracy, and too many tools overlook it.

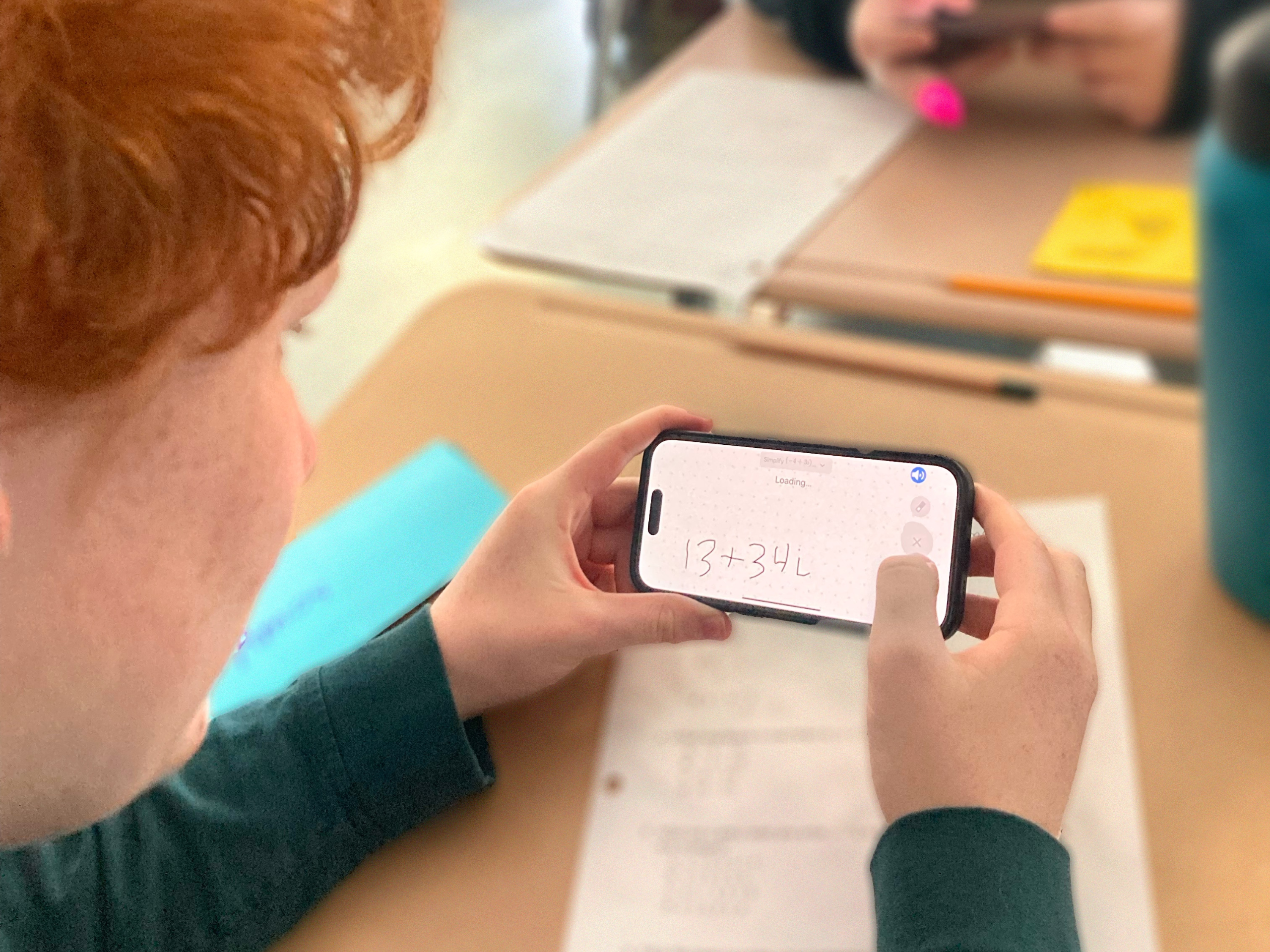

Many solutions focus exclusively on getting the answer right, with little regard for keeping students engaged. That’s a missed opportunity, especially for students who struggle in traditional math settings. We saw a few standout solutions experimenting with strategies to boost motivation, engagement, and persistence. These included multimodal interaction, such as drawing on digital whiteboards or thinking out loud when prompted; AI-enhanced peer-to-peer learning environments; and even “teachable agents,” AI companions that students teach as a way to deepen their own thinking. Passive engagement with chatbots is not the frontier of AI in education. We’re supporting ventures that address both what students learn and how they stay connected to learning, because motivation is what drives persistence and long-term success.

Building and Testing What Works

The ventures we’re supporting take different approaches but share a commitment to pairing innovation with inclusion and evidence. What we’ve learned is shaping how we support them, and through research partnerships with Leanlab Education and The Learning Agency, we’re positioned to influence the design of future tools.

This spring, we’ll conduct a pilot with educators and students to study usability, accuracy, engagement, and learning impact. Their feedback will be critical to refining these tools and clarifying when and how GenAI should be woven into math tutoring.

We’re also supporting new benchmarking efforts to set clearer standards. Right now, AI developers have few ways to test whether their solutions align with proven tutoring practices. By building benchmarks around math reasoning, student engagement, and instructional coherence, we can help the field better define what “good” looks like.

Together, classroom evaluations and stronger benchmarks will help us generate early insights on what works best. In the short term, success means students taking more ownership of their learning and tutors becoming more effective, supported by timely, accurate feedback. Over time, this work can give the field the clarity it needs to scale solutions with the greatest impact on math growth.

But AI isn’t a panacea. It’s one piece of a larger puzzle that depends on relationships, instructional coherence, and smart implementation. We’ll get better by collaborating with educators and students to test tools thoughtfully, learn what works, and improve along the way.

We’ll introduce our ventures this fall. For now, we hope these takeaways spark reflection for funders, developers, and education leaders working to build stronger solutions and better outcomes for students.